A software solution has to pass through several processes before it is ready for the market, and performance testing is one of the most crucial.

Software performance testing evaluates how software behaves in terms of stability, speed, and responsiveness under a workload. A software system typically goes through several types of performance testing before it reaches the market.

Here’s a comprehensive guide with everything you need to know about software performance testing.

Importance of Software Performance Testing

Software performance testing plays a crucial role in ensuring the reliability, stability, and efficiency of our applications. Here are some key reasons why it’s essential.

User Experience Enhancement

Performance testing helps in identifying and resolving issues related to speed, responsiveness, and overall user experience. By simulating real-world usage scenarios, we can ensure that our software meets the performance expectations of our users, leading to higher satisfaction and retention rates.

Scalability Assessment

As our user base grows or as we introduce new features, it’s vital to assess how well our software can scale to accommodate increased loads. Performance testing allows us to understand the system’s behavior under various levels of stress and helps in optimizing scalability to support future growth.

Cost Reduction

Identifying performance bottlenecks early in the development cycle can significantly reduce the cost of fixing issues later. Performance testing enables us to pinpoint areas of inefficiency and address them proactively, minimizing the risk of costly downtime or negative impact on business operations.

Reliability and Stability

High-performing software also has to be reliable and stable. Through rigorous performance testing, we can detect and eliminate potential sources of crashes, hangs, or failures, so that our applications operate smoothly under the conditions they are expected to face.

Competitive Advantage

In today’s competitive market, users have high expectations for software performance. By consistently delivering high-quality, well-performing applications, we can differentiate ourselves from competitors and maintain a strong market position.

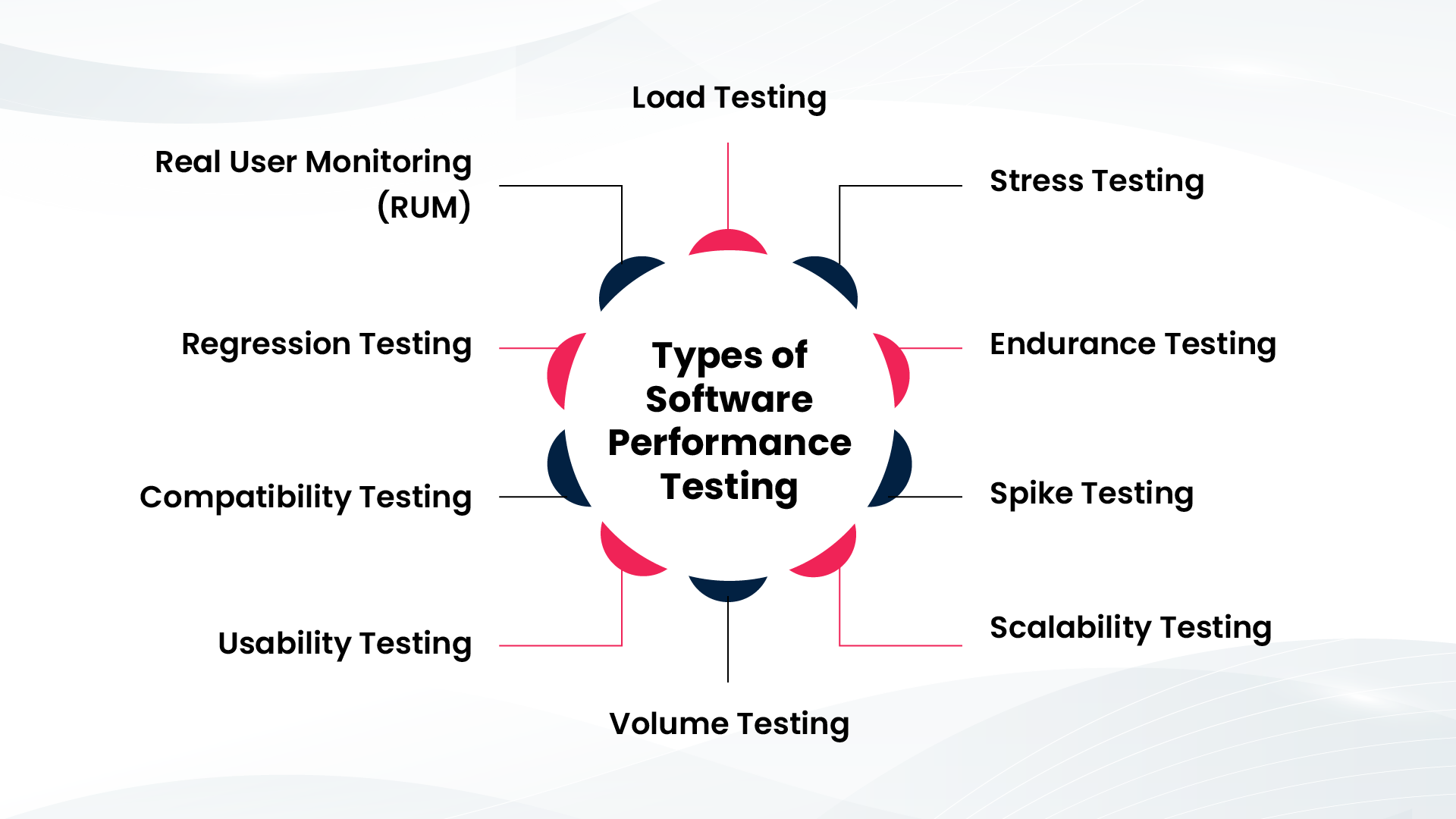

Types of Software Performance Testing

Let’s delve into some of the key types of software performance testing:

1. Load Testing

Load testing involves simulating realistic user behavior to assess how the system performs under expected loads. By subjecting the software to typical usage scenarios, developers can identify performance bottlenecks and tune resource allocation accordingly.
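To make the idea concrete, here is a minimal sketch of a load test built only on Python's standard library. The `handle_request` function is a hypothetical stand-in for a real user action (for example, an HTTP call to your application), and the user counts are illustrative assumptions, not recommendations.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> bool:
    """Hypothetical stand-in for one user action; replace with a real call."""
    time.sleep(0.001)  # simulate a small amount of server-side work
    return True


def run_load_test(virtual_users: int, requests_per_user: int) -> list[float]:
    """Run the stand-in request concurrently; return per-request latencies in seconds."""

    def user_session(user_id: int) -> list[float]:
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            handle_request(user_id)
            latencies.append(time.perf_counter() - start)
        return latencies

    # One worker thread per simulated user, all sessions running in parallel.
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        sessions = pool.map(user_session, range(virtual_users))
        return [latency for session in sessions for latency in session]


if __name__ == "__main__":
    results = run_load_test(virtual_users=10, requests_per_user=5)
    print(f"{len(results)} requests, slowest {max(results) * 1000:.1f} ms")
```

A thread-per-user model like this is only suitable for small experiments; dedicated load testing tools exist to generate far larger and more realistic loads.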

2. Stress Testing

Stress testing pushes the software beyond its expected limits by applying heavy loads or driving resources to their maximum capacity. This type of testing helps uncover breaking points and shows how the system behaves under extreme conditions such as sudden spikes in traffic or resource constraints.

3. Endurance Testing

Endurance testing, also known as soak testing, evaluates the system’s performance over an extended period under sustained load. By monitoring for memory leaks, resource utilization, and system stability over time, developers can ensure the software maintains its performance without degradation during prolonged usage.
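One simple way to spot a possible memory leak during a soak test is to fit a straight line through periodic memory readings and look at the slope. The sketch below assumes the readings were collected at a fixed interval by whatever monitoring you already use; the function name and units are our own choices for illustration.

```python
def memory_growth_per_hour(samples_mb: list[float], interval_minutes: float) -> float:
    """Least-squares slope of memory usage over time, in MB per hour."""
    n = len(samples_mb)
    if n < 2 or interval_minutes <= 0:
        raise ValueError("need at least two samples and a positive interval")
    times = [i * interval_minutes / 60 for i in range(n)]  # hours since start
    mean_t = sum(times) / n
    mean_m = sum(samples_mb) / n
    covariance = sum((t - mean_t) * (m - mean_m) for t, m in zip(times, samples_mb))
    variance = sum((t - mean_t) ** 2 for t in times)
    return covariance / variance


if __name__ == "__main__":
    readings = [100.0, 105.0, 110.0, 115.0]  # one reading every 30 minutes
    print(f"{memory_growth_per_hour(readings, 30):.1f} MB/hour")
```

A slope that stays clearly above zero for the whole run is a hint worth investigating, not proof of a leak; caches warming up can produce the same pattern early in a test.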

4. Spike Testing

Spike testing assesses the system’s ability to handle sudden and significant increases in traffic or workload. By simulating abrupt spikes in user activity, developers can gauge how well the software scales and whether it can handle rapid fluctuations in demand without compromising performance.
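A spike test starts from a load profile: a steady baseline interrupted by a short burst. The following sketch generates such a profile as a target user count per second. The parameter names and values are illustrative assumptions; a real load generator would consume a profile like this to decide how many virtual users to run at each moment.

```python
def spike_profile(
    duration_s: int,
    baseline_users: int,
    spike_users: int,
    spike_start_s: int,
    spike_length_s: int,
) -> list[int]:
    """Target number of concurrent users for each second of the test."""
    spike_end_s = spike_start_s + spike_length_s
    return [
        spike_users if spike_start_s <= second < spike_end_s else baseline_users
        for second in range(duration_s)
    ]


if __name__ == "__main__":
    # 6-second test: 10 users normally, 100 users during seconds 2 and 3.
    print(spike_profile(6, 10, 100, spike_start_s=2, spike_length_s=2))
```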

5. Scalability Testing

Scalability testing focuses on evaluating how well the system can accommodate growing or changing demands by increasing resources or adding components. By measuring performance metrics under varying loads, developers can determine scalability limits and identify optimization opportunities.

6. Volume Testing

Volume testing assesses how the software handles large amounts of data. By simulating scenarios with extensive datasets or high transaction volumes, developers can identify and optimize data-handling processes to maintain responsiveness and efficiency.

This testing is critical for data-heavy applications, where it helps ensure a reliable user experience under real-world usage conditions.

7. Usability Testing

Usability testing focuses on evaluating the software from the end user’s perspective, with an emphasis on user interface (UI) and user experience (UX). While not traditionally considered a performance test, usability testing plays a crucial role in ensuring that the software performs well and is intuitive and easy to use.

By observing how users interact with the software and gathering feedback, developers can identify areas for improvement to enhance overall user satisfaction.

8. Compatibility Testing

Compatibility testing ensures that the software functions correctly across different platforms, devices, browsers, and operating systems. While not directly related to performance, compatibility issues can impact the software’s overall usability and accessibility. By conducting compatibility testing, developers can identify and address any compatibility issues early in the development process, ensuring a seamless user experience across various environments.

9. Regression Testing

Regression testing involves retesting the software after making changes or updates to ensure that new code does not adversely affect existing functionality. While primarily focused on ensuring software stability, regression testing also indirectly contributes to performance by verifying that performance optimizations made in previous iterations are still effective.

By automating regression testing where possible, developers can quickly identify and fix any performance regressions introduced during the development cycle.

10. Real User Monitoring (RUM)

Real user monitoring involves monitoring and analyzing the performance of the software in real time based on data collected from actual user interactions. By tracking metrics such as page load times, transaction times, and error rates in production environments, developers can gain valuable insights into how users experience the software in the wild.

This real-world feedback can inform future performance optimizations and ensure that the software continues to meet user expectations over time.

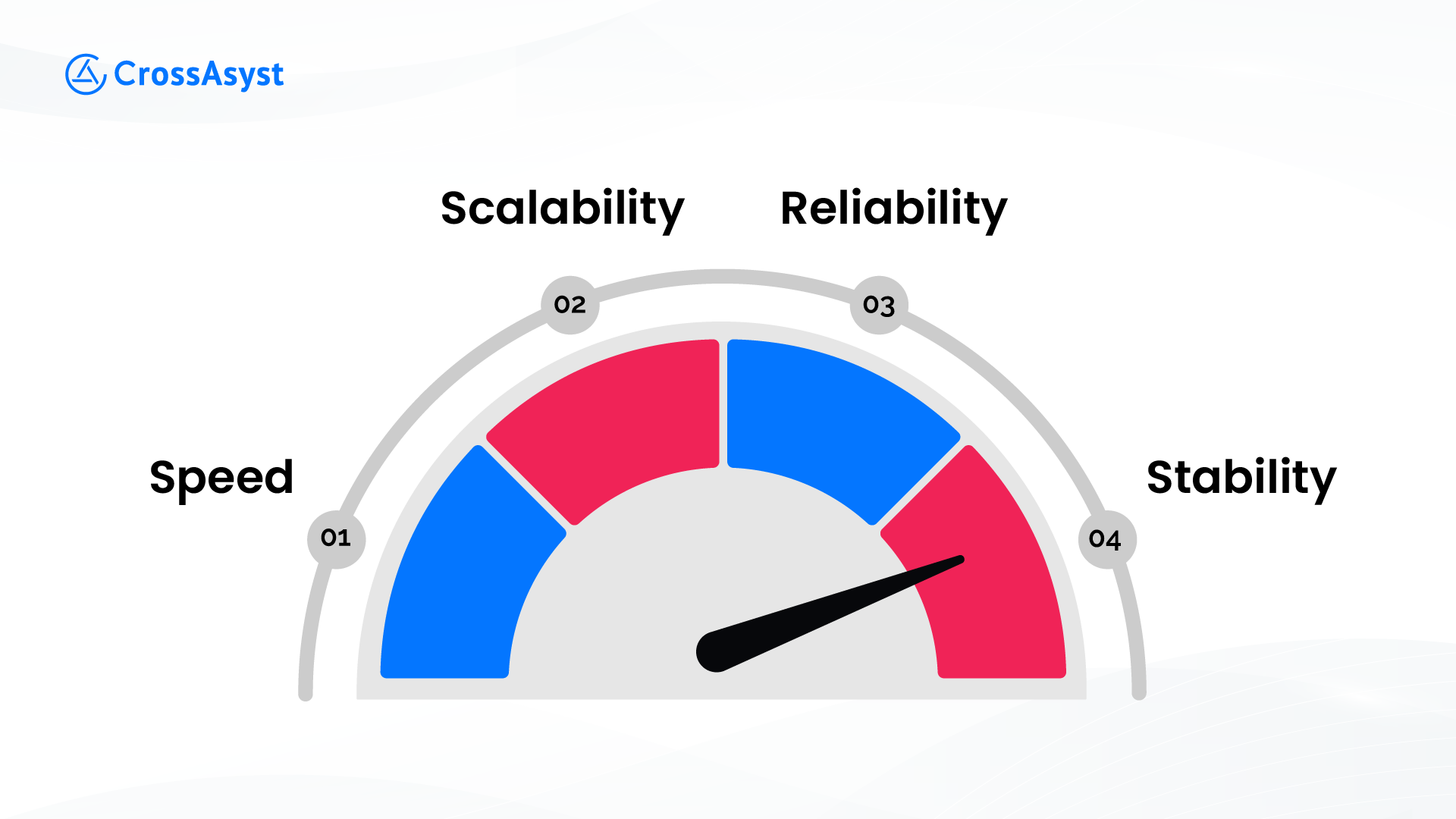

Attributes of Software Performance Testing

Let’s now take a look at the attributes of software performance testing. Incorporating these attributes into software performance testing not only ensures the quality and reliability of the application but also enhances user satisfaction and fosters trust in the software’s capabilities.

1. Speed

Speed, perhaps the most visible aspect of performance, directly impacts user experience. Performance testing evaluates how quickly the software responds to user actions or system events. By measuring response times, throughput, and latency, developers can identify opportunities to optimize code, improve algorithms, or enhance resource utilization, ultimately delivering a faster and more responsive application.

2. Scalability

Scalability measures the ability of the software to handle increasing workloads or growing user bases without sacrificing performance. Performance testing assesses how well the application scales both vertically (by adding more resources to a single component) and horizontally (by adding more instances or nodes).

By testing scalability, developers can ensure that the software can accommodate future growth seamlessly, maintaining optimal performance levels as demand increases.
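A common way to put a number on scalability is to compare measured throughput after scaling against ideal linear scaling. The helper below is a minimal sketch of that calculation; the function name is hypothetical and the figures in the example are made up for illustration.

```python
def scaling_efficiency(
    baseline_throughput: float, scaled_throughput: float, scale_factor: float
) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    if baseline_throughput <= 0 or scale_factor <= 0:
        raise ValueError("baseline throughput and scale factor must be positive")
    return scaled_throughput / (baseline_throughput * scale_factor)


if __name__ == "__main__":
    # Illustrative numbers: 500 req/s on one node, 1600 req/s on four nodes.
    print(f"{scaling_efficiency(500, 1600, 4):.0%} of linear scaling")
```

With these example numbers the result is 0.8, meaning the four-node setup delivers 80% of what perfectly linear scaling would give.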

3. Reliability

Reliability is paramount in ensuring that the software performs consistently under varying conditions. Performance testing evaluates the system’s ability to execute tasks accurately and reliably over time.

By identifying and addressing issues such as memory leaks, race conditions, or resource contention, developers can enhance the reliability of the software, minimizing the risk of crashes, errors, or unexpected behavior.

4. Stability

Stability refers to the software’s ability to maintain consistent performance levels without failure or degradation over extended periods. Performance testing assesses the system’s resilience to stress, load, and environmental factors.

By subjecting the software to rigorous testing under different scenarios, developers can identify stability issues such as memory leaks, deadlock situations, or system crashes. Addressing these issues ensures that the software remains stable and robust under real-world usage conditions.

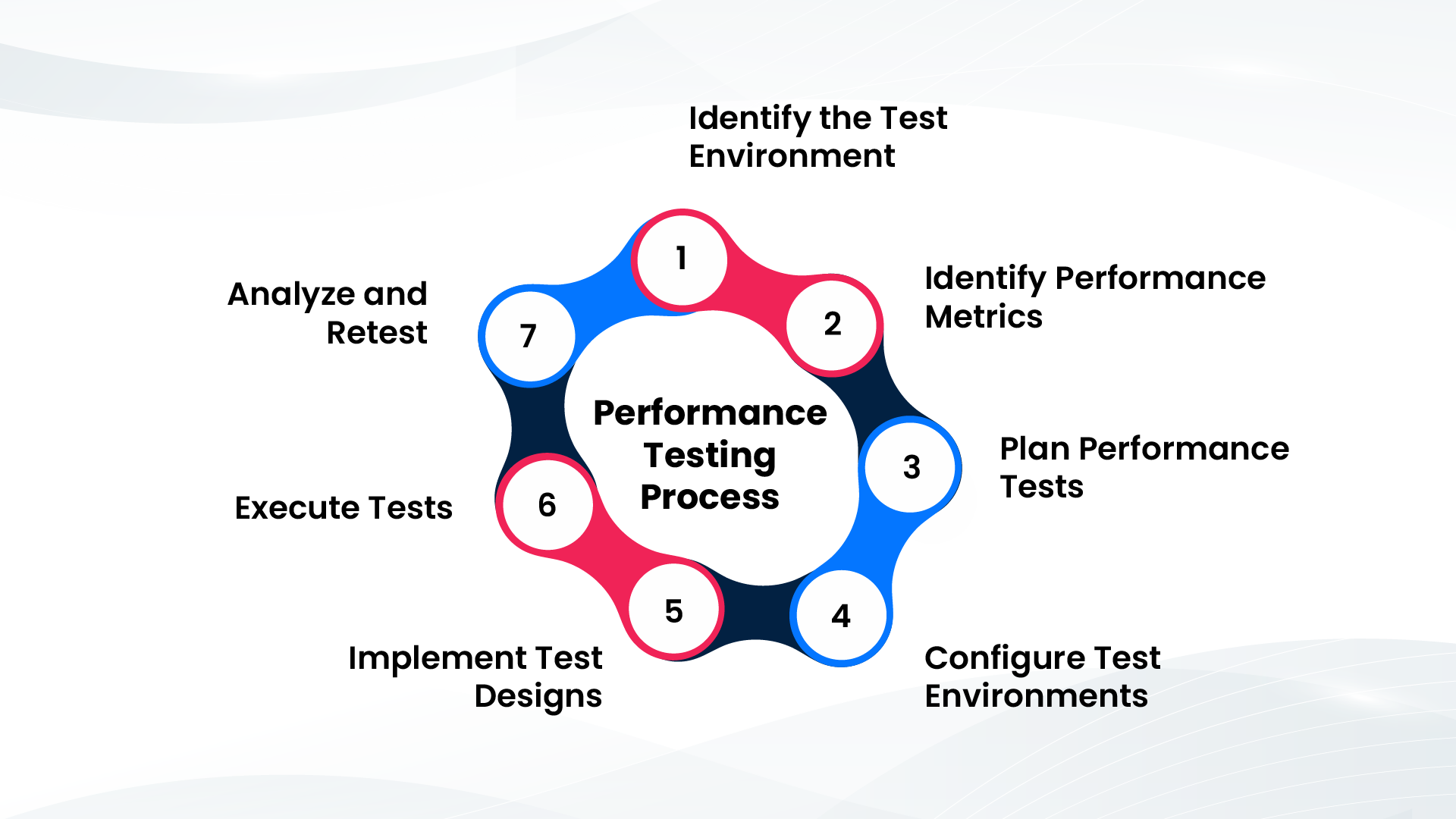

The Software Performance Testing Process

Now that we know the importance of performance testing software solutions, and the types of performance testing that a software product needs to go through, let’s discuss the testing process itself.

The software performance testing process can be broken down into seven steps. Here’s a quick look at each of them.

1. Identify the Test Environment

The first step in performance testing is to identify the test environment accurately. This involves understanding the infrastructure, hardware, software, network configuration, and other relevant factors that will impact the performance of the application.

By creating a test environment that closely mirrors the production environment, we can ensure realistic and meaningful performance testing results.

2. Identify Performance Metrics

Next, it’s essential to define clear and measurable performance metrics that align with the goals and objectives of the application. These metrics may include response time, throughput, CPU utilization, memory usage, error rates, and more.

By identifying key performance indicators (KPIs), we can effectively gauge the performance of the application and track improvements over time.

3. Plan Performance Tests

With the test environment and performance metrics in place, the next step is to plan the performance tests. This involves defining test scenarios, user profiles, workload models, and test scripts.

Test scenarios should cover a range of use cases, including typical user interactions, peak load scenarios, and stress testing scenarios. A well-thought-out test plan ensures comprehensive coverage of the application’s performance characteristics.

4. Configure Test Environments

Once the test plan is finalized, it’s time to configure the test environment accordingly. This may involve setting up test servers, databases, network configurations, and other necessary infrastructure components.

It’s crucial to ensure that the test environment is stable, consistent, and representative of the production environment to obtain accurate performance testing results.

5. Implement Test Designs

With the test environment configured, the next step is to implement the test designs and develop the necessary test scripts or automation frameworks. This may involve using performance testing tools to simulate user interactions, generate load, and collect performance metrics. Test designs should be executed systematically, following the predefined test scenarios and workload models.

6. Execute Tests

Once the test designs are implemented, it’s time to execute the performance tests. This involves running the test scripts, simulating user interactions, and generating load on the application under test. During test execution, it’s essential to monitor key performance metrics in real time and collect relevant performance data for analysis.

7. Analyze and Retest

After completing the performance tests, the final step is to analyze the results and identify any performance issues or bottlenecks. This may involve correlating performance metrics, identifying trends, and pinpointing areas for optimization.

Based on the analysis, necessary adjustments can be made to improve the performance of the application. It’s also essential to retest the application after implementing changes to validate improvements and ensure that performance goals are met.

Software Performance Testing Measurable Metrics

Let’s now take a look at the key metrics that play an important role in software performance testing. By monitoring and analyzing these metrics, developers can gain valuable insights into the performance characteristics of the application and make informed decisions to optimize performance and enhance user experience.

1. Response Time

Response time refers to the time taken for the application to respond to a user’s request. It includes the time required for processing the request, executing the necessary operations, and returning the response to the user. Monitoring response time helps assess the overall responsiveness of the application and identify any bottlenecks or performance issues.
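In code, response time is simply the wall-clock time around a call. Here is a small sketch using `time.perf_counter`, which is designed for measuring short durations. The `timed_call` helper is our own illustrative name, and the example times a built-in function rather than a real request.

```python
import time
from typing import Any, Callable


def timed_call(func: Callable[..., Any], *args: Any, **kwargs: Any) -> tuple[Any, float]:
    """Call func and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    total, elapsed = timed_call(sum, range(1_000_000))
    print(f"result={total}, response time={elapsed * 1000:.2f} ms")
```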

2. Wait Time

Wait time represents how long users wait before the application begins to respond, and is often measured as the time between sending a request and receiving the first byte of the response. It includes delays caused by server processing, network latency, or other factors. Minimizing wait time is essential for a good user experience.

3. Average Load Time

Average load time measures the average time taken for web pages, resources, or transactions to load completely. It provides insights into the overall speed and efficiency of the application from the user’s perspective. Optimizing average load time can significantly improve user satisfaction and retention rates.

4. Peak Response Time

Peak response time indicates the maximum time taken for the application to respond to a request during periods of peak load or high concurrency. It helps identify performance bottlenecks and ensures that the application maintains acceptable response times even under heavy traffic conditions.
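The average and peak figures described above can be computed from a list of recorded response times. The sketch below also reports a 95th percentile, which is our addition: it is a common companion to the peak because a single outlier can dominate the maximum. The function name and sample values are illustrative.

```python
import math
import statistics


def summarize_latencies(latencies_ms: list[float]) -> dict[str, float]:
    """Return average, 95th percentile (nearest-rank), and peak latency."""
    if not latencies_ms:
        raise ValueError("no latencies recorded")
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(0.95 * len(ordered)))  # nearest-rank method
    return {
        "average": statistics.fmean(ordered),
        "p95": ordered[rank - 1],
        "peak": ordered[-1],
    }


if __name__ == "__main__":
    print(summarize_latencies([120, 80, 100, 400, 100]))
```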

5. Error Rate

Error rate quantifies the frequency of errors or failures encountered by users while interacting with the application. It includes HTTP error codes, server errors, timeouts, and other types of errors. Monitoring error rate helps identify potential issues and prioritize bug fixes or optimizations to enhance reliability and user experience.
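As a rough sketch, error rate can be calculated from the status codes collected during a test run. The example assumes that any HTTP status of 400 or above counts as an error and that timeouts are tallied separately. Both are assumptions you may want to adjust, since some teams count only 5xx responses.

```python
def error_rate(status_codes: list[int], timeouts: int = 0) -> float:
    """Share of requests that failed: HTTP 4xx/5xx responses plus timeouts."""
    total = len(status_codes) + timeouts
    if total == 0:
        return 0.0
    failed = sum(1 for code in status_codes if code >= 400) + timeouts
    return failed / total


if __name__ == "__main__":
    codes = [200, 200, 500, 404]
    print(f"error rate: {error_rate(codes, timeouts=1):.0%}")
```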

6. Concurrent Users

Concurrent users represent the number of users accessing the application simultaneously. Monitoring concurrent users helps assess the application’s ability to handle concurrent sessions and concurrent transactions effectively. It provides insights into scalability requirements and helps optimize resource allocation to support growing user bases.

7. Requests per Second

Requests per second (RPS) measures the rate at which the application processes incoming requests or transactions. It reflects the application’s throughput capacity and helps identify performance limitations under various load conditions. Tracking RPS as load increases shows whether the application can handle growing workloads without performance degradation.
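One way to derive RPS from raw test data is to bucket request completion times into one-second windows. The sketch below assumes timestamps are recorded as non-negative seconds since the start of the test; the function name is hypothetical.

```python
from collections import Counter


def rps_by_second(completion_times_s: list[float]) -> dict[int, int]:
    """Count completed requests in each one-second window since test start."""
    return dict(Counter(int(t) for t in completion_times_s))


if __name__ == "__main__":
    timestamps = [0.1, 0.5, 0.9, 1.2, 2.0, 2.7]
    per_second = rps_by_second(timestamps)
    print(per_second, "peak RPS:", max(per_second.values()))
```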

8. Transaction Status

Transaction status indicates the success or failure of individual transactions or user interactions within the application. Monitoring transaction status helps identify functional defects, performance bottlenecks, or integration issues that may impact the user experience. It ensures that critical transactions are executed successfully and reliably.

9. Throughput

Throughput measures the rate at which the application processes and delivers data or transactions. It reflects how much work the system actually completes in a given period and is often measured in bytes per second or transactions per second. Monitoring throughput helps optimize system resources and ensure efficient data processing and delivery.

10. CPU Utilization

CPU utilization quantifies the percentage of CPU capacity utilized by the application during testing. It provides insights into the application’s resource usage and helps identify CPU-bound performance bottlenecks. Optimizing CPU utilization ensures efficient resource allocation and improves overall system performance.

11. Memory Utilization

Memory utilization measures the amount of memory consumed by the application during testing. It includes both physical and virtual memory usage and helps identify memory leaks, excessive memory consumption, or inefficient memory management practices. Optimizing memory utilization ensures optimal performance and stability of the application.
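For Python code, the standard library's `tracemalloc` module offers a quick way to see how much memory a piece of work allocates. Note the assumption: this sketch only tracks allocations made by the Python interpreter in the current process, so it is a narrow illustration and not a substitute for system-level monitoring of a deployed application. The helper name is our own.

```python
import tracemalloc
from typing import Any, Callable


def peak_memory_bytes(func: Callable[..., Any], *args: Any, **kwargs: Any) -> tuple[Any, int]:
    """Run func and return (result, peak traced Python memory in bytes)."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()  # (current, peak)
    finally:
        tracemalloc.stop()
    return result, peak


if __name__ == "__main__":
    data, peak = peak_memory_bytes(lambda: [0] * 100_000)
    print(f"built {len(data)} items, peak {peak / 1024:.0f} KiB")
```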

Software Performance Testing Best Practices

Here are some software performance testing best practices that can help ensure the effectiveness and efficiency of your testing efforts.

1. Early Integration

Integrate performance testing into the software development lifecycle from the early stages of development. By identifying and addressing performance issues early on, you can prevent costly rework and ensure that performance considerations are prioritized throughout the development process.

2. Define Clear Objectives

Clearly define the goals and objectives of your performance testing efforts. Determine what aspects of performance you want to evaluate, such as response time, throughput, scalability, or reliability. Having clear objectives will guide your testing approach and help you focus on what matters most to your application and users.

3. Realistic Test Scenarios

Develop realistic test scenarios that simulate actual usage patterns and workload conditions. Consider factors such as user behavior, data volumes, transaction volumes, and concurrency levels. Realistic test scenarios will provide more meaningful insights into how your application performs under real-world conditions.

4. Use Production-Like Environment

Test in an environment that closely resembles the production environment in terms of infrastructure, hardware, software, network configuration, and data. This ensures that your performance testing results accurately reflect how the application will perform in the real world.

5. Automate Testing Where Possible

Automate performance testing processes and tasks to increase efficiency, repeatability, and accuracy. Use performance testing tools to automate test script generation, test execution, and result analysis. Automation allows you to run tests more frequently, identify performance regressions quickly, and free up resources for more complex testing tasks.

6. Monitor Key Metrics

Monitor key performance metrics such as response time, throughput, error rate, CPU utilization, and memory utilization during testing. These metrics provide insights into the performance characteristics of your application and help you identify performance bottlenecks and areas for optimization.

7. Baseline Performance

Establish baseline performance metrics for your application under normal operating conditions. Baseline metrics serve as a point of reference for future performance testing efforts and help you track performance improvements or regressions over time.
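Once a baseline exists, comparing new results against it can be automated. The following sketch flags metrics that have worsened by more than a chosen tolerance. It assumes every metric is "lower is better" (latency, error rate) and uses a 10% tolerance purely as an example; the metric names are hypothetical.

```python
def find_regressions(
    baseline: dict[str, float], current: dict[str, float], tolerance: float = 0.10
) -> dict[str, float]:
    """Return metrics whose value rose by more than `tolerance` (as a fraction).

    Assumes lower is better for every metric, e.g. latency or error rate.
    """
    regressions = {}
    for name, base_value in baseline.items():
        if name not in current or base_value <= 0:
            continue  # nothing to compare against
        change = (current[name] - base_value) / base_value
        if change > tolerance:
            regressions[name] = change
    return regressions


if __name__ == "__main__":
    baseline = {"p95_ms": 200.0, "avg_ms": 100.0}
    current = {"p95_ms": 250.0, "avg_ms": 105.0}
    for metric, change in find_regressions(baseline, current).items():
        print(f"{metric} regressed by {change:.0%}")
```

A check like this can run after each test cycle, so that a regression is noticed when it is introduced and not several releases later.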

8. Incremental Testing

Run performance tests incrementally throughout the development lifecycle rather than waiting until the end. This allows you to identify and address performance issues early, iterate on performance improvements, and ensure that performance considerations are integrated into each development iteration.

9. Collaborative Approach

Foster collaboration between developers, testers, and other stakeholders throughout the performance testing process. Encourage open communication, knowledge sharing, and cross-functional collaboration to ensure that performance testing aligns with business goals, user expectations, and technical requirements.

10. Continuous Optimization

Continuously optimize the performance of your application based on performance testing results and user feedback. Implement performance improvements iteratively, monitor the impact of changes on performance metrics, and prioritize optimization efforts based on business value and user impact.

Popular Software Performance Testing Tools

Here are some of the popular software performance testing tools used to automate the testing process.

Akamai CloudTest

Akamai CloudTest offers a comprehensive platform for performance testing, enabling users to simulate real-world traffic conditions and analyze application performance across various environments. With features such as load testing, stress testing, and scalability testing, CloudTest empowers teams to identify and address performance issues proactively.

BlazeMeter

BlazeMeter is a cloud-based performance testing platform that provides robust capabilities for load testing, performance monitoring, and continuous testing. With support for various testing protocols and integrations with popular CI/CD tools, BlazeMeter streamlines the performance testing process and facilitates collaboration among development and testing teams.

JMeter

Apache JMeter is an open-source performance testing tool widely used for load testing, stress testing, and performance monitoring of web applications. With its intuitive GUI and extensive feature set, JMeter allows users to create and execute complex test scenarios, analyze performance metrics, and generate comprehensive test reports.

LoadRunner

LoadRunner, long sold by Micro Focus and now part of OpenText following its acquisition of Micro Focus, is a widely used performance testing tool known for its scalability, versatility, and advanced scripting capabilities. With support for a wide range of protocols and technologies, LoadRunner enables users to simulate real-world user behavior, identify performance bottlenecks, and optimize application performance.

LoadStorm

LoadStorm is a cloud-based load testing tool designed to simulate high traffic volumes and measure application performance under stress. With its easy-to-use interface and flexible pricing options, LoadStorm allows users to create realistic test scenarios, execute tests from multiple geographic locations, and analyze performance metrics in real time.

NeoLoad

NeoLoad is a performance testing solution that offers advanced features for load testing, stress testing, and scalability testing of web and mobile applications. With its intuitive design, built-in test design accelerators, and real-time monitoring capabilities, NeoLoad enables teams to optimize application performance and deliver superior user experiences.

Leverage CrossAsyst’s QA and Test Automation Expertise

As a custom software development company with more than a decade of experience across business verticals, we at CrossAsyst understand the importance of a robust QA and testing process.

Our extensive capabilities in QA and test automation are crafted to guarantee the superior quality of your software solutions. Covering performance and security testing, our proficient team utilizes advanced techniques and state-of-the-art tools to provide dependable, top-notch software that surpasses your expectations.

Our team uses some of the most advanced testing tools, including JMeter, BlazeMeter and LoadRunner. Our streamlined testing protocol ensures your custom software is optimized to perform flawlessly under all conditions.

Book a meeting with CrossAsyst today, and let us work together to bring your software vision to life.