Data plays an important role in testing the quality of software applications. The process of validating data to ensure its accuracy, completeness, and consistency is known as data testing. This blog post explores data testing: why it matters, its key components and methods, and best practices.

Data Testing and Its Importance in Data Analytics

Data testing is a comprehensive process used to check and ensure the quality of data in databases, data warehouses, and data lakes. It involves various methodologies and processes to validate both the data itself and the data handling mechanisms.

The importance of data testing in data analytics cannot be overstated, as it directly impacts the reliability of insights derived from data analyses. Accurate and reliable data is the backbone of sound decision-making processes, enabling organizations to strategize effectively and optimize operations.

Importance of Data Reliability

Data reliability and accuracy are critical in decision-making. Inaccurate data can lead to erroneous decisions, potentially causing financial loss, reputational damage, and operational inefficiencies. Reliable data, on the other hand, ensures that businesses can trust the insights generated from their data analytics processes, leading to better-informed strategies and actions.

Why Data Testing Matters

Data testing is just as important in software testing. Before looking at how it supports decision-making, let us consider the risks of inaccurate data.

Financial Repercussions

Flawed data can lead to misguided decisions, resulting in significant financial losses. For instance, inaccuracies in financial forecasting or customer data can lead companies to allocate resources inefficiently, pursue unprofitable ventures, or miss out on lucrative opportunities.

Reputational Damage

The impact of erroneous data extends beyond tangible financial losses to a company’s reputation. In an era when data mishandling, such as data breaches, is highly publicized, visible errors caused by inaccurate data can erode trust among customers and stakeholders, potentially leading to a loss of business.

Operational Inefficiencies

Inaccurate data can cause operational bottlenecks, leading to inefficiencies and wasted effort. When teams base their workflows and strategies on unreliable data, the resultant actions may not only be futile but can also divert attention from critical issues, compounding inefficiencies.

Let’s also take a quick look at the role accurate, well-tested data plays in the decision-making process.

Foundation of Trustworthy Decisions

Data testing plays a pivotal role in validating the assumptions underlying data models and analyses. By rigorously testing data, teams can ensure that the foundations of their decision-making processes are solid, making for more accurate predictions and strategies.

Upholding Data Integrity

Maintaining the integrity of data throughout its lifecycle is paramount. Data testing ensures that from collection to analysis, data remains consistent, accurate, and free from corruption. This is crucial in environments where data is constantly being updated, migrated, or transformed.

Improved Data Quality

Data testing directly contributes to the enhancement of data quality by identifying and rectifying inaccuracies before they can impact analytics processes. High-quality data is indispensable for generating reliable insights, making data testing an integral part of any analytics strategy.

Confidence in Analytics Outcomes

With data testing, businesses can place greater confidence in their analytics outcomes. Knowing that the data underlying these analyses has been rigorously tested for accuracy and integrity allows businesses to make informed decisions more swiftly and with greater certainty.

Continuous Improvement

Data testing is not a one-off task but a continuous process that feeds into the larger cycle of analytics. By regularly testing data, companies can adapt to changes more effectively, continuously improve their analytics processes, and stay ahead of emerging challenges.

Key Components of Data Testing

There are three key components in data testing. Here’s a more detailed look at each of them.

Data Validation

Data validation is a critical first line of defense in maintaining high-quality data. This process involves a series of checks to ensure that incoming data adheres to predefined standards and quality benchmarks. It covers several dimensions:

- Accuracy: Validation ensures that data accurately represents real-world values or conditions, free from errors or distortions.

- Relevance: It assesses whether the data is suitable and pertinent for the intended use, helping to avoid the incorporation of irrelevant information that could skew analytics outcomes.

- Format: Proper formatting is crucial for compatibility and usability across different systems and processes. Data validation includes verifying formats such as dates, numerical values, and text strings to ensure they meet specific formatting rules.

- Completeness: This aspect of validation checks for missing or incomplete data entries, which are critical for comprehensive analysis and accurate decision-making.
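To make these dimensions concrete, here is a minimal sketch of record-level validation, assuming a hypothetical order record with `order_id`, `order_date`, and `amount` fields. It covers completeness, format, and a simple plausibility check standing in for accuracy; true accuracy checks usually need a trusted source to compare against, and relevance depends on the intended use, so neither is fully captured here.

```python
from datetime import datetime

# Hypothetical required fields for an order record.
REQUIRED_FIELDS = ("order_id", "order_date", "amount")


def validate_record(record):
    """Return a list of validation errors for one record (empty list means valid)."""
    errors = []

    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")

    # Format: dates are assumed to follow ISO 8601 (YYYY-MM-DD).
    date = record.get("order_date")
    if date:
        try:
            datetime.strptime(date, "%Y-%m-%d")
        except ValueError:
            errors.append(f"bad date format: {date!r}")

    # Accuracy (plausibility only): amounts must be non-negative numbers.
    amount = record.get("amount")
    if amount not in (None, ""):
        if not isinstance(amount, (int, float)) or amount < 0:
            errors.append(f"implausible amount: {amount!r}")

    return errors


records = [
    {"order_id": 1, "order_date": "2024-03-15", "amount": 49.99},
    {"order_id": 2, "order_date": "15/03/2024", "amount": 20.0},
    {"order_id": 3, "order_date": "2024-03-16", "amount": -5},
    {"order_id": 4, "order_date": "", "amount": 10.0},
]

for r in records:
    print(r["order_id"], validate_record(r) or "OK")
```

In practice, the rules would come from your own data contracts; the point is that each dimension maps to an explicit, repeatable check.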

Model Validation

Model validation plays a pivotal role in ensuring the reliability of predictive data models and simulations.

- Accuracy and Predictive Performance: Model validation tests the accuracy of data models in predicting outcomes based on input data. It involves comparing model predictions against known outcomes to evaluate performance.

- Representation of Real-World Processes: This process ensures that models accurately represent the complexities and nuances of real-world processes they aim to simulate, taking into account variables, conditions, and dynamics that affect outcomes.

- Robustness and Generalizability: Model validation also assesses the robustness of models, ensuring they perform well across a variety of conditions and datasets. This is crucial for generalizing model predictions beyond the initial training data.

- Iterative Improvement: Through model validation, weaknesses in models can be identified, providing opportunities for refinement and improvement. This iterative process helps in tuning models to achieve higher accuracy and reliability.
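As an illustration, the sketch below compares a model’s predictions against known outcomes on more than one labelled dataset and flags any dataset where accuracy falls below a threshold. The churn rule, the datasets, and the 0.8 threshold are all hypothetical; a real project would use its own model and metrics.

```python
def accuracy(predictions, actuals):
    """Fraction of predictions that match the known outcomes."""
    if len(predictions) != len(actuals) or not actuals:
        raise ValueError("need equal-length, non-empty sequences")
    return sum(p == a for p, a in zip(predictions, actuals)) / len(actuals)


def validate_model(model, datasets, min_accuracy):
    """Score the model on each labelled dataset and record whether it meets the threshold."""
    report = {}
    for name, (inputs, actuals) in datasets.items():
        predictions = [model(x) for x in inputs]
        score = accuracy(predictions, actuals)
        report[name] = (score, score >= min_accuracy)
    return report


# Hypothetical model: predict churn if a customer has not logged in for over 30 days.
def churn_model(days_since_login):
    return days_since_login > 30


# Hypothetical hold-out sets: (inputs, known outcomes).
datasets = {
    "holdout_2023": ([5, 40, 12, 60], [False, True, False, True]),
    "holdout_2024": ([35, 10, 45, 20], [False, False, True, True]),
}

report = validate_model(churn_model, datasets, min_accuracy=0.8)
for name, (score, passed) in report.items():
    print(f"{name}: accuracy={score:.2f} {'PASS' if passed else 'FAIL'}")
```

Scoring on several datasets, rather than one, is what surfaces robustness problems: here the rule matches every outcome in one set but only half in the other.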

Data Transformation Accuracy

Data transformation, a key process in preparing data for analysis, involves converting data from its original form into a format more suitable for specific analytics tasks. Ensuring the accuracy of these transformations is vital for maintaining data integrity.

- Correctness of Operations: This includes verifying that operations such as sorting, aggregation, summarization, and normalization have been executed correctly, without introducing errors or distortions.

- Data Integrity Post-Transformation: It’s crucial to ensure that the data maintains its integrity after transformation. This means that relationships within the data, as well as its overall structure, remain intact and accurate.

- Impact on Subsequent Analyses: The accuracy of data transformation directly influences the outcomes of subsequent analyses. Inaccurate transformations can lead to misleading insights, making it imperative to validate these processes thoroughly.

- Automation and Repeatability: With the increasing scale of data processing, ensuring that transformation processes are both automated and repeatable while maintaining high accuracy becomes essential. This not only streamlines workflows but also reinforces the reliability of data through its lifecycle.
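One common way to check an aggregation is to reconcile its output with its input: totals should be preserved, and no group should appear or disappear. The following is a minimal sketch assuming a hypothetical sales feed of `(region, amount_in_cents)` rows; integer cents are used so that sums compare exactly.

```python
from collections import defaultdict


def aggregate_by_region(rows):
    """Transformation under test: total sales (in cents) per region."""
    totals = defaultdict(int)
    for region, cents in rows:
        totals[region] += cents
    return dict(totals)


def reconcile(source_rows, aggregated):
    """Check that the aggregation neither lost nor invented data."""
    problems = []
    source_total = sum(cents for _, cents in source_rows)
    output_total = sum(aggregated.values())
    if output_total != source_total:
        problems.append(f"grand total mismatch: {source_total} vs {output_total}")
    source_keys = {region for region, _ in source_rows}
    if set(aggregated) != source_keys:
        problems.append(f"region mismatch: {sorted(source_keys ^ set(aggregated))}")
    return problems


rows = [("east", 1000), ("west", 2500), ("east", 500)]
print(reconcile(rows, aggregate_by_region(rows)))  # a correct aggregation reconciles
print(reconcile(rows, {"east": 1500}))             # a faulty output that dropped a region
```

Because the check is a plain function, it can run automatically after every transformation job, which supports the repeatability point above.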

Data Testing Methods

Let’s now look at three commonly used data testing methods, including the purpose of each, implementation strategies, and real-world examples of their usage.

1. Structural Testing (Schema Testing)

Structural testing is aimed at validating the architecture of databases and data warehouses. It focuses on ensuring that the database schema correctly defines data types, relationships, constraints, and indexes, which are crucial for data integrity and performance.

Implementation Strategies

This involves creating test cases to verify each element of the database schema. Tools can be employed to automatically detect deviations from expected structures, such as incorrect data types or missing indexes.

Benefits

Ensures the foundational integrity of data storage systems, facilitating efficient data retrieval and storage, and preventing data corruption or loss.

Real-World Example

Consider a financial application that manages user transactions. Structural testing would verify that transaction amounts are stored as fixed-point decimal types rather than integers (which would drop fractional amounts) or floating-point types (which can introduce rounding errors), and that foreign keys correctly link transactions to user accounts, safeguarding against data anomalies.
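A structural test for this example might inspect the live schema and compare it with an expected definition. The sketch below uses Python’s built-in `sqlite3` module and SQLite’s `PRAGMA table_info` and `PRAGMA foreign_key_list`; the table names, columns, and expected types are hypothetical, and other databases expose the same information through their own catalog views (for example, `information_schema`).

```python
import sqlite3

# Hypothetical expected schema: column name -> declared type.
EXPECTED_COLUMNS = {
    "id": "INTEGER",
    "user_id": "INTEGER",
    "amount": "DECIMAL(12,2)",
}
# Hypothetical expected foreign key: (from column, referenced table, referenced column).
EXPECTED_FK = ("user_id", "users", "id")


def check_schema(conn: sqlite3.Connection) -> list[str]:
    """Compare the transactions table against the expected structure."""
    problems = []
    # table_info rows: (cid, name, type, notnull, dflt_value, pk)
    actual = {row[1]: row[2].upper() for row in conn.execute("PRAGMA table_info(transactions)")}
    for column, expected_type in EXPECTED_COLUMNS.items():
        if column not in actual:
            problems.append(f"missing column: {column}")
        elif actual[column] != expected_type:
            problems.append(f"{column}: expected {expected_type}, found {actual[column]}")
    # foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
    fks = {(row[3], row[2], row[4]) for row in conn.execute("PRAGMA foreign_key_list(transactions)")}
    if EXPECTED_FK not in fks:
        problems.append(f"missing foreign key: {EXPECTED_FK}")
    return problems


good = sqlite3.connect(":memory:")
good.executescript("""
CREATE TABLE users (id INTEGER PRIMARY KEY);
CREATE TABLE transactions (
    id INTEGER PRIMARY KEY,
    user_id INTEGER REFERENCES users(id),
    amount DECIMAL(12,2)
);
""")

bad = sqlite3.connect(":memory:")
bad.executescript("""
CREATE TABLE users (id INTEGER PRIMARY KEY);
CREATE TABLE transactions (id INTEGER PRIMARY KEY, user_id INTEGER, amount INTEGER);
""")

print(check_schema(good))
print(check_schema(bad))
```

Note that SQLite only records the declared type and applies type affinity loosely, so this checks the schema definition rather than how values are physically stored.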

2. Functional Testing

Functional testing assesses the correctness of business logic applied to data. This encompasses testing data manipulation functions, such as calculations, data imports/exports, and the implementation of business rules within software applications.

Implementation Strategies

Test cases are designed to mirror typical and edge-case scenarios that the application might encounter in real-world operation. Automated testing frameworks can simulate data inputs and verify if outputs match expected results based on the application’s business logic.

Benefits

Helps ensure that the application processes data correctly according to defined business rules, improving the reliability and accuracy of its outputs.

Real-World Example

In an e-commerce platform, functional testing could involve verifying that the platform correctly calculates total order costs, including taxes and discounts, under various scenarios (e.g., different customer locations, promotional codes).
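A table-driven test is a natural fit for this scenario: each row pairs an input combination with the total the business rules say it should produce. The sketch below assumes hypothetical rules (discount applied before tax, totals rounded half-up to cents) and hypothetical tax rates and promo codes; the function under test would be your platform’s real pricing logic.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical tax rates by customer location.
TAX_RATES = {"CA": Decimal("0.0725"), "OR": Decimal("0.00")}
# Hypothetical promotional codes mapped to a fractional discount.
PROMO_CODES = {"SAVE10": Decimal("0.10")}


def order_total(items, location, promo_code=None):
    """Assumed business rule: discount first, then tax, rounded half-up to cents."""
    subtotal = sum((price * qty for price, qty in items), Decimal("0"))
    discount = PROMO_CODES.get(promo_code, Decimal("0"))  # unknown codes give no discount
    discounted = subtotal * (1 - discount)
    total = discounted * (1 + TAX_RATES[location])
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


CART = [(Decimal("20.00"), 2), (Decimal("10.00"), 1)]  # subtotal 50.00

# (description, items, location, promo code, expected total)
CASES = [
    ("taxed location, no promo", CART, "CA", None, Decimal("53.63")),
    ("taxed location, valid promo", CART, "CA", "SAVE10", Decimal("48.26")),
    ("untaxed location, valid promo", CART, "OR", "SAVE10", Decimal("45.00")),
    ("edge case: unknown promo code", CART, "CA", "BOGUS", Decimal("53.63")),
    ("edge case: empty cart", [], "CA", None, Decimal("0.00")),
]

for description, items, location, promo, expected in CASES:
    actual = order_total(items, location, promo)
    assert actual == expected, f"{description}: expected {expected}, got {actual}"
print("all order-total cases passed")
```

Using `Decimal` instead of floats keeps the expected values exact, so a failing case points at the business logic rather than at floating-point noise.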

3. Non-Functional Testing

This testing focuses on the system’s operational aspects, such as performance, security, and scalability, which do not involve specific business functionalities but are critical for the system’s overall reliability and user experience.

Implementation Strategies

- Data Security Testing: Employ penetration testing and vulnerability scanning to identify security weaknesses that could lead to data breaches.

- Performance Testing: Use tools to simulate high volumes of traffic or data processing to assess the system’s performance under load.

- Scalability Testing: Evaluate how well the system can handle increasing amounts of data or concurrent users.
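Dedicated load-testing tools are the usual choice here, but the core idea can be sketched in a few lines: run the processing step at increasing input sizes, time each run, and compare it with a latency budget. The `process` function below is a hypothetical stand-in for a real analytics step (counting posts per hashtag), and the 2-second budget is an assumption.

```python
import random
import time


def process(posts):
    """Hypothetical analytics step: count posts per hashtag."""
    counts = {}
    for tag in posts:
        counts[tag] = counts.get(tag, 0) + 1
    return counts


def load_test(sizes, budget_seconds):
    """Time `process` at increasing input sizes and flag runs that exceed the budget."""
    results = []
    for n in sizes:
        posts = [random.choice(["#goal", "#final", "#var"]) for _ in range(n)]
        start = time.perf_counter()
        process(posts)
        elapsed = time.perf_counter() - start
        results.append((n, elapsed, elapsed <= budget_seconds))
    return results


for n, elapsed, ok in load_test([10_000, 100_000, 500_000], budget_seconds=2.0):
    print(f"{n:>9} posts: {elapsed:.3f}s {'OK' if ok else 'OVER BUDGET'}")
```

Watching how elapsed time grows as the input grows gives a rough scalability signal; timings vary by machine, so budgets should be set against the target environment.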

Benefits

Ensures that the system is robust, secure, and capable of handling its intended load without compromising on performance or data integrity.

Real-World Example

For a social media analytics tool, non-functional testing would involve assessing how quickly the system can process and display insights derived from millions of posts during high-traffic events (for example, a major sporting event), while also ensuring user data is protected against unauthorized access.

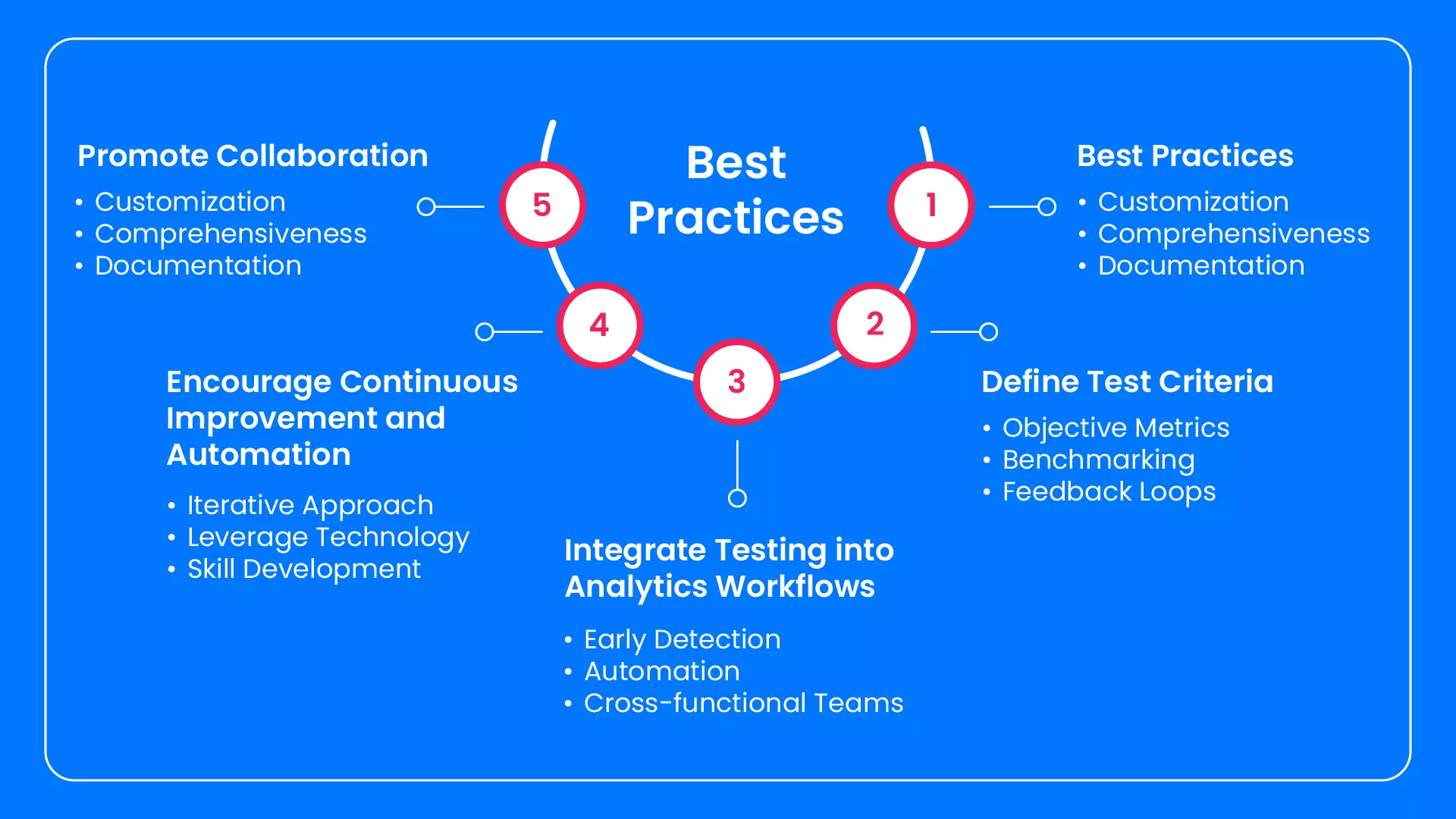

Data Testing Best Practices

Ensuring the integrity and reliability of data through effective testing practices is crucial for organizations that rely on data analytics. Implementing these best practices can significantly enhance the quality of data testing processes.

1. Establish a Testing Framework

A structured testing framework serves as the blueprint for all data testing activities. It should cover every phase of the data lifecycle, from collection and storage to analysis and reporting.

- Customization: Tailor the framework to fit the unique data environments, regulatory requirements, and business objectives of your organization.

- Comprehensiveness: Include guidelines for various types of testing, such as structural, functional, and non-functional, ensuring a thorough evaluation of data systems.

- Documentation: Document the framework clearly, making it accessible to all stakeholders involved in the data management process, thus facilitating consistency and transparency.

2. Define Test Criteria

Establishing clear, objective criteria for what constitutes successful and unsuccessful testing outcomes is vital for assessing data quality and system functionality.

- Objective Metrics: Develop quantifiable metrics that can be measured during testing to evaluate success or identify failure points.

- Benchmarking: Use industry standards or historical data performance as benchmarks for setting test criteria, aiding in the objective assessment of data quality and system performance.

- Feedback Loops: Implement mechanisms for regularly reviewing and updating test criteria based on new insights, technological advancements, or changes in business strategy.
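Objective criteria work best when they are written down as explicit thresholds that a test run can evaluate automatically. The sketch below measures two hypothetical metrics, completeness and duplicate rate, and checks them against assumed thresholds; in practice, the values would come from benchmarks or historical performance, as described above.

```python
# Hypothetical thresholds; real values would come from benchmarks or historical data.
CRITERIA = {
    "completeness": 0.99,    # minimum share of rows with no missing values
    "duplicate_rate": 0.01,  # maximum share of rows with a repeated ID
}


def measure(rows, key="id"):
    """Compute quantifiable quality metrics for a batch of rows."""
    if not rows:
        raise ValueError("cannot measure an empty batch")
    total = len(rows)
    complete = sum(all(v is not None for v in row.values()) for row in rows)
    duplicates = total - len({row[key] for row in rows})
    return {"completeness": complete / total, "duplicate_rate": duplicates / total}


def evaluate(metrics):
    """Compare each metric against its threshold: True means the criterion is met."""
    return {
        "completeness": metrics["completeness"] >= CRITERIA["completeness"],
        "duplicate_rate": metrics["duplicate_rate"] <= CRITERIA["duplicate_rate"],
    }


# Synthetic batch: every 40th row is missing an email, plus one duplicated ID.
rows = [{"id": i, "email": None if i % 40 == 0 else f"user{i}@example.com"} for i in range(200)]
rows.append({"id": 5, "email": "user5@example.com"})

metrics = measure(rows)
print(metrics)
print(evaluate(metrics))
```

Keeping the thresholds in one place also makes the feedback loop easier: updating a criterion means changing a single value that every test run picks up.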

3. Integrate Testing into Analytics Workflows

Embedding data testing processes within analytics workflows ensures that data quality is maintained throughout the analytics lifecycle, enhancing the reliability of insights derived from data.

- Early Detection: By integrating testing early in the analytics workflows, issues can be identified and rectified before they impact downstream processes, saving time and resources.

- Automation: Utilize automated testing tools to conduct routine tests throughout the analytics processes, maintaining data quality continuously while reducing the need for manual intervention.

- Cross-functional Teams: Involve teams across functions in the testing process, from data engineers to analysts, to ensure that data quality is a shared responsibility and is maintained across all stages of analytics.
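One way to put early detection and automation into practice is a quality gate: a step between extraction and loading that runs a set of checks and halts the pipeline if any fail. The sketch below is a minimal illustration; the checks, the `DataQualityError` class, and the `run_pipeline` helper are hypothetical names, not part of any specific framework.

```python
class DataQualityError(Exception):
    """Raised when a batch fails a quality check, stopping the pipeline early."""


def no_missing_ids(rows):
    return all(r.get("id") is not None for r in rows)


def amounts_non_negative(rows):
    return all(r.get("amount", 0) >= 0 for r in rows)


CHECKS = [no_missing_ids, amounts_non_negative]


def quality_gate(rows, checks=CHECKS):
    """Run every check; reject the batch if any fail, otherwise pass it through."""
    failed = [check.__name__ for check in checks if not check(rows)]
    if failed:
        raise DataQualityError(f"batch rejected, failed checks: {failed}")
    return rows


def run_pipeline(extract, load):
    rows = extract()
    load(quality_gate(rows))  # load only runs if every check passes


loaded = []
run_pipeline(lambda: [{"id": 1, "amount": 10}], loaded.extend)
try:
    run_pipeline(lambda: [{"id": None, "amount": -3}], loaded.extend)
except DataQualityError as exc:
    print(exc)
print(loaded)  # only the clean batch reached the load step
```

Because bad batches are stopped before the load step, downstream dashboards and models never see them, which is the practical payoff of testing early in the workflow.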

4. Promote Collaboration

Fostering a collaborative environment among data scientists, IT professionals, and business analysts ensures that data quality and integrity are viewed from multiple perspectives, enriching the testing process.

- Interdisciplinary Teams: Create teams composed of members from different disciplines to bring diverse perspectives to the testing process, enhancing problem identification and solution generation.

- Communication Channels: Establish open and effective communication channels to facilitate the sharing of knowledge, challenges, and insights among team members.

- Collaborative Tools: Leverage collaborative tools and platforms that enable seamless sharing of data, test results, and documentation, supporting a unified approach to data quality.

5. Encourage Continuous Improvement and Automation

Adopting a mindset of continuous improvement, supported by automation, can significantly increase the efficiency and effectiveness of data testing processes.

- Iterative Approach: Embrace an iterative approach to testing, where processes are regularly evaluated and refined based on outcomes, feedback, and emerging best practices.

- Leverage Technology: Invest in automation tools and technologies that can streamline testing processes, reduce manual errors, and free up human resources for more complex analysis and decision-making tasks.

- Skill Development: Encourage ongoing learning and development in the areas of data testing and automation among team members, ensuring that the organization stays at the forefront of industry advancements and maintains a competitive edge.

Conclusion

Data testing plays a vital role in software testing. The data testing methods covered here give organizations clearer insight into their operational realities and help them make better-informed decisions. Our testing team at CrossAsyst recognizes the value data has to businesses like yours, and has the expertise to enable accurate data visualization and data-driven decisions. Learn more about how we have helped our clients around the world with their data needs by booking a call with us today!